We can guide you in setting up AI content moderation tools for your website, app, or internal workflow. While we don’t directly moderate content ourselves, we specialize in helping you implement and integrate trusted moderation solutions that suit your platform’s needs. Whether you’re managing user-generated comments, uploads, forum posts, or support messages, AI-driven tools can flag inappropriate content in real time, reducing the burden on your moderation team.

We support integration with powerful moderation systems like OpenAI’s moderation API, Google’s Perspective API, or custom self-hosted models using open-source libraries. These tools can detect and filter harmful language, hate speech, harassment, spam, and other violations based on your chosen rules and thresholds. They’re scalable, adaptive, and constantly improving, which makes them ideal for platforms that expect high volumes of interaction.
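As a rough illustration of what “rules and thresholds” means in practice, the Python sketch below compares per-category scores (numbers between 0 and 1, the form most moderation APIs return) against limits you choose. The category names and threshold values are assumptions for illustration, not recommended settings.

```python
# Illustrative per-category thresholds (assumed values; tune them for your platform).
THRESHOLDS: dict[str, float] = {
    "hate": 0.40,
    "harassment": 0.50,
    "sexual": 0.60,
    "violence": 0.70,
}
DEFAULT_THRESHOLD = 0.80  # applied to any category not listed above


def violations(scores: dict[str, float],
               thresholds: dict[str, float] = THRESHOLDS) -> list[str]:
    """Return, sorted, the categories whose score meets or exceeds its threshold."""
    return sorted(
        category
        for category, score in scores.items()
        if score >= thresholds.get(category, DEFAULT_THRESHOLD)
    )


if __name__ == "__main__":
    sample = {"hate": 0.05, "harassment": 0.72, "violence": 0.10, "spam": 0.85}
    print(violations(sample))  # ['harassment', 'spam']
```

Lowering a threshold catches more violations but also flags more harmless content, so each limit is a trade-off you tune against your real traffic.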

Integration for WordPress/Web Apps

| Platform | How to Integrate Moderation |
|---|---|
| WordPress | Use plugins such as CleanTalk or Akismet (spam filtering), or a custom OpenAI moderation plugin |
| Custom sites | Use JavaScript/PHP middleware to send text or images to a moderation API before saving |
| Form plugins | Add moderation to WPForms or Contact Form 7 via a webhook that calls the OpenAI moderation endpoint |
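The “check before saving” pattern from the table does not depend on the language. Here is a minimal Python sketch of it (the table mentions JavaScript/PHP; the flow is the same). `CommentStore` and the `is_flagged` hook are hypothetical names: in production the hook would wrap a real moderation API call, but injecting it keeps the flow testable offline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CommentStore:
    """Hypothetical in-memory stand-in for your database or CMS."""
    published: list[str] = field(default_factory=list)
    held_for_review: list[str] = field(default_factory=list)


def submit_comment(text: str, is_flagged: Callable[[str], bool],
                   store: CommentStore) -> str:
    """Run moderation *before* saving; only clean text is published.

    `is_flagged` is a hypothetical hook. In production it would wrap a call
    to a moderation API; injecting it keeps this flow testable offline.
    """
    if is_flagged(text):
        store.held_for_review.append(text)  # never published automatically
        return "held"
    store.published.append(text)
    return "published"


if __name__ == "__main__":
    def toy_check(text: str) -> bool:
        # Toy stand-in for a real moderation call, for demonstration only.
        return "buy cheap pills" in text.lower()

    store = CommentStore()
    print(submit_comment("Great article, thanks!", toy_check, store))  # published
    print(submit_comment("BUY CHEAP PILLS now", toy_check, store))     # held
```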

✅ What AI Can Moderate

| Content Type | Examples AI Can Detect |
|---|---|
| Text | Hate speech, abuse, spam, explicit language, scams |
| Images | Nudity, violence, fake content |
| User-generated content (UGC) | Comments, reviews, uploads, chat messages |

Choosing the right tool depends on your content type, user base, and risk level. For example, OpenAI’s moderation model is highly effective for text-based platforms, while custom models can be trained for niche use cases or non-English content. We help you assess these options, configure the right setup, and connect it seamlessly with your existing infrastructure, whether you’re using WordPress, a custom-built app, or an enterprise CMS.

🔧 Moderation Tool Options

🧠 1. OpenAI Moderation Endpoint (Text)

- Detects hate, harassment, self-harm, sexual content, and violence

- Returns an overall `flagged` result plus per-category flags and scores

- Easily integrated into any form, comment box, or chat system

Example Use:

```http
POST https://api.openai.com/v1/moderations
Authorization: Bearer YOUR_OPENAI_API_KEY
Content-Type: application/json

{
  "input": "your user-submitted content"
}
```
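For a server-side integration, a minimal Python sketch using only the standard library might look like the following. It assumes the response shape documented for this endpoint (a `results` list whose entries carry `flagged`, `categories`, and `category_scores`) and an API key in the `OPENAI_API_KEY` environment variable; the helper names are our own.

```python
import json
import os
import urllib.request

MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (without sending) a POST request to the moderation endpoint."""
    body = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize(response: dict) -> tuple[bool, list[str]]:
    """Return (flagged, sorted names of flagged categories) for the first input."""
    result = response["results"][0]
    hits = sorted(name for name, hit in result["categories"].items() if hit)
    return result["flagged"], hits


def moderate(text: str) -> tuple[bool, list[str]]:
    """Call the endpoint; requires network access and OPENAI_API_KEY to be set."""
    request = build_request(text, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=10) as response:
        return summarize(json.load(response))


if __name__ == "__main__":
    # Offline demo on a hand-written response of the documented shape.
    sample = {"results": [{"flagged": True,
                           "categories": {"harassment": True, "hate": False},
                           "category_scores": {"harassment": 0.91, "hate": 0.02}}]}
    print(summarize(sample))  # (True, ['harassment'])
```

`summarize` is kept separate from the network call so you can test your decision logic against saved responses without calling the API.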

📷 2. Google Cloud Vision SafeSearch (Images)

- Flags adult, violent, or racy content in uploaded images

- Ideal for profile photos, marketplaces, uploads
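SafeSearch reports a likelihood label rather than a numeric score for each of its fields (`adult`, `spoof`, `medical`, `violence`, `racy`). The sketch below assumes the REST API’s JSON form, where `safeSearchAnnotation` holds these labels as strings, and flags an image when any chosen field reaches `LIKELY`; the fields checked, the cutoff, and the function name are illustrative choices.

```python
# SafeSearch likelihood labels, ordered from least to most likely.
LIKELIHOOD_ORDER = [
    "UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY",
]


def should_flag_image(annotation: dict[str, str],
                      fields: tuple[str, ...] = ("adult", "violence", "racy"),
                      min_level: str = "LIKELY") -> bool:
    """Flag the image if any checked field is at or above `min_level`.

    Missing fields are treated as "UNKNOWN", the lowest level here.
    """
    cutoff = LIKELIHOOD_ORDER.index(min_level)
    return any(
        LIKELIHOOD_ORDER.index(annotation.get(name, "UNKNOWN")) >= cutoff
        for name in fields
    )


if __name__ == "__main__":
    # Hand-written example of a REST `safeSearchAnnotation` object.
    annotation = {"adult": "VERY_UNLIKELY", "spoof": "UNLIKELY",
                  "medical": "UNLIKELY", "violence": "POSSIBLE", "racy": "LIKELY"}
    print(should_flag_image(annotation))  # True (racy is LIKELY)
```

Treating `UNKNOWN` as the lowest level is also a choice; a stricter setup might send unknowns to manual review instead.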

🤖 3. Self-Hosted Models (for full control)

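- Use open-source models such as:
  - HateBERT, Detoxify (text)
  - CLIP + NSFW classifiers (image)

- Note that Perspective API (text) is a hosted Google service rather than an open-source model, so it belongs with the hosted options above.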

📈 Bonus Features You Can Add

- 🔄 Auto-hide flagged comments

- ⚠️ Warning popups to users before submitting

- 📩 Email alerts to admin for manual review

- ✅ Auto-approve clean content
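Several of these features can hang off a single decision. As a sketch, assuming you reduce each item to its highest category score, two cutoffs (placeholder values here) split content into auto-approve, manual review with an admin email, and auto-hide:

```python
from enum import Enum


class Action(Enum):
    AUTO_APPROVE = "auto_approve"
    REVIEW = "review"        # hold the item and email an admin
    AUTO_HIDE = "auto_hide"


def route(max_score: float, approve_below: float = 0.2,
          hide_at: float = 0.8) -> Action:
    """Map an item's highest category score to an action (placeholder cutoffs)."""
    if not 0.0 <= approve_below < hide_at <= 1.0:
        raise ValueError("expected 0 <= approve_below < hide_at <= 1")
    if max_score >= hide_at:
        return Action.AUTO_HIDE
    if max_score < approve_below:
        return Action.AUTO_APPROVE
    return Action.REVIEW


if __name__ == "__main__":
    for score in (0.05, 0.5, 0.93):
        print(score, route(score).value)
```

A warning popup fits the same pattern: score the draft before submission and show the warning if it lands in the review band, while the server-side check still makes the final call.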

At Wemaxa.com, our role is to bridge the gap between your content policies and the technology that enforces them. By leveraging AI moderation, you can maintain a safe, respectful, and compliant environment without manual review at every step. We make the process streamlined, secure, and tailored to your goals.


AI HELP FOR CONTENT MODERATION

Do You Offer AI Content Moderation Tools?

When it comes to managing a modern digital platform, content moderation has become one of the most pressing challenges facing businesses today. Whether you operate a community-driven forum, a social media network, an e-commerce platform, or even a company blog with an active comment section, the sheer volume of user-generated content can quickly overwhelm human moderators. Manual review is costly, slow, inconsistent, and often demoralizing for staff, particularly when they are exposed to harmful or offensive material. That is why businesses in 2026 are increasingly adopting AI-powered moderation tools as part of their digital infrastructure. These systems are designed to automate the detection of offensive language, inappropriate media, spam, and even subtle patterns of manipulation or abuse. At Wemaxa, we understand that moderation is no longer a secondary issue but a central factor in maintaining trust and user engagement, which is why we offer solutions that integrate advanced machine learning algorithms, natural language processing, and computer vision technologies to keep online communities safe and productive.

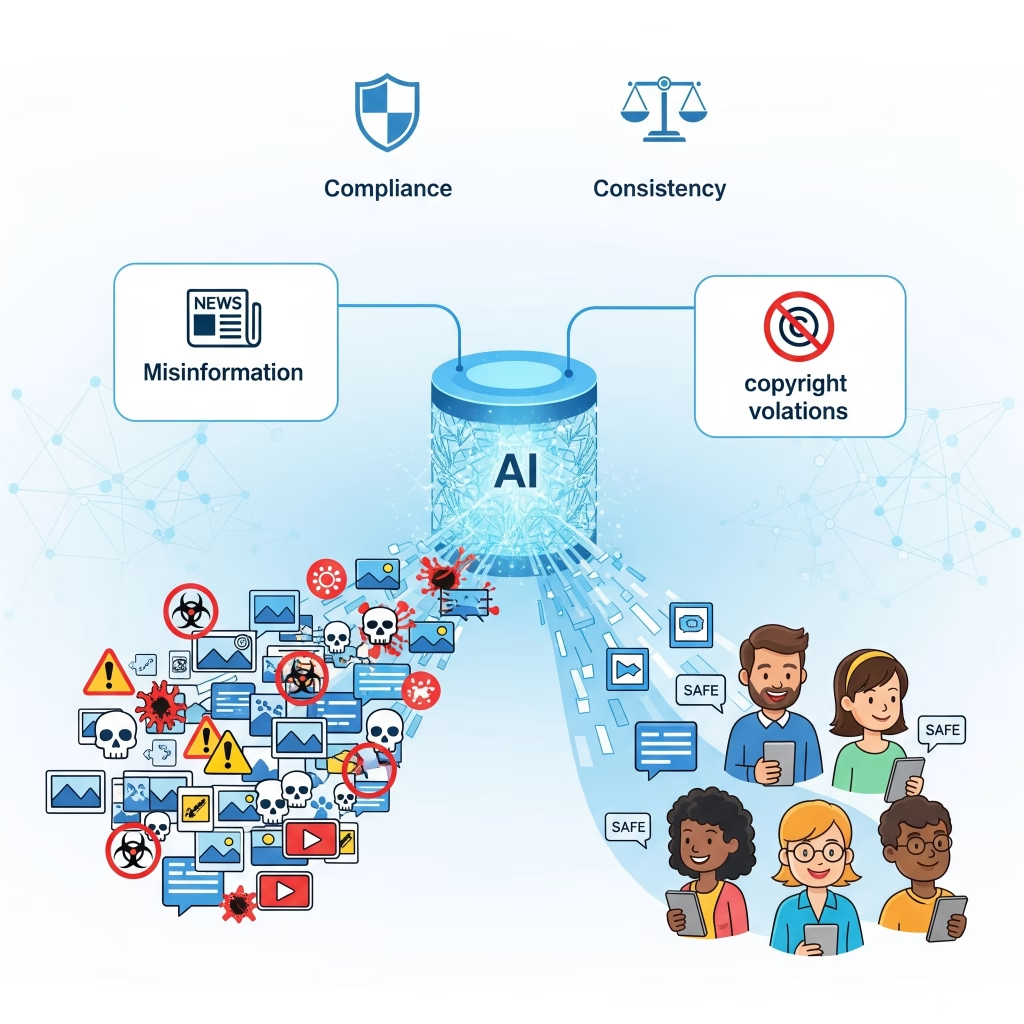

The advantages of implementing AI-based content moderation are significant. Unlike human moderators, who tire and may apply rules unevenly, AI systems can process thousands of posts, images, or videos in real time against the same criteria every time, although models can still inherit biases from their training data. This does not eliminate the need for human oversight entirely, but it dramatically reduces the workload while ensuring that harmful or illegal material is flagged almost instantly. In practical terms, this helps your platform keep pace with evolving digital regulations such as the EU Digital Services Act and with online safety frameworks promoted by organizations like the OECD, while also improving the experience for legitimate users who want to engage without being exposed to spam, harassment, or unsafe material. AI moderation tools can be fine-tuned to reflect your platform’s unique values and policies, which is crucial because industries define acceptable content differently. For example, an online learning portal may prioritize filtering out misinformation, while a fashion marketplace might focus on copyright violations or counterfeit product listings. By aligning the AI’s training data and detection thresholds with your specific goals, businesses can strike a balance between safety and openness that scales with the platform and fits the brand.

Another critical benefit of AI-powered content moderation is its capacity to evolve alongside user behavior. Malicious actors constantly adapt their tactics to evade detection, using techniques such as code-switching, slang, image overlays, and coordinated bot activity. Traditional keyword filters and manual review processes cannot keep up with these rapid shifts. Machine learning models, by contrast, learn patterns from large datasets and can be retrained on newly labeled examples as tactics change, which makes them far more resilient against new forms of abuse. This proactive defense helps ensure that your platform does not fall behind competitors who already leverage automation. Moreover, AI moderation frees human moderators to focus on higher-value cases that require nuance, context, and ethical judgment, such as borderline disputes or complex cultural sensitivities. This hybrid approach combines the efficiency of AI with the judgment of experienced professionals, creating a layered strategy that is far more effective than either method alone.
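One way to organize the human side of this hybrid approach is a review queue that surfaces the riskiest borderline items first. The sketch below uses Python’s `heapq`; the class and field names are hypothetical, and the risk score is assumed to come from whichever moderation tool you use.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class _Entry:
    priority: float                      # negated risk score (heapq is a min-heap)
    seq: int                             # tie-breaker: earlier submissions first
    item_id: str = field(compare=False)


class ReviewQueue:
    """Borderline items awaiting human review, highest AI risk score first."""

    def __init__(self) -> None:
        self._heap: list[_Entry] = []
        self._counter = itertools.count()

    def push(self, item_id: str, risk_score: float) -> None:
        # Negate the score so the riskiest item sits at the top of the min-heap.
        heapq.heappush(self._heap, _Entry(-risk_score, next(self._counter), item_id))

    def pop(self) -> str:
        """Remove and return the ID of the riskiest pending item."""
        return heapq.heappop(self._heap).item_id

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.push("comment-17", 0.45)
    queue.push("comment-42", 0.71)
    print(queue.pop())  # comment-42
```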

From a business perspective, integrating AI moderation tools also strengthens brand reputation and user retention. Users who feel unsafe, harassed, or constantly exposed to low-quality spam are unlikely to return, let alone recommend your platform to others. A secure, well-moderated environment, on the other hand, fosters trust, encourages meaningful engagement, and contributes directly to higher customer lifetime value. This pattern is visible across major platforms such as Meta and X (formerly Twitter), where investment in AI-driven moderation is treated not just as a defensive necessity but as part of a growth strategy. Smaller businesses can also benefit from scaled-down versions of these technologies, which are increasingly accessible through cloud services and SaaS models. In 2026, failing to implement AI moderation is not just a missed opportunity; it can be a competitive liability. Regulators, advertisers, and users alike expect a proactive stance on safety, and those who ignore it risk reputational damage and financial penalties.

At Wemaxa, our approach to AI content moderation is rooted in flexibility and integration. We provide tools that can be embedded into your existing CMS, e-commerce system, or community management platform, so you do not need to rebuild infrastructure from scratch. Whether you require automated flagging, real-time filtering, or post-moderation review queues, our solutions are built to adapt to your workflows. More importantly, we advise our clients on best practices for configuring AI systems in a way that respects freedom of expression while upholding clear community guidelines. This involves not just technical deployment but also strategic alignment with your company’s values and legal obligations. By offering these moderation solutions, we aim to help businesses build safer, smarter, and more sustainable digital ecosystems. For organizations that want to thrive in the era of user-generated content, adopting AI moderation is not simply an option; it is the foundation of digital trust and operational scalability.