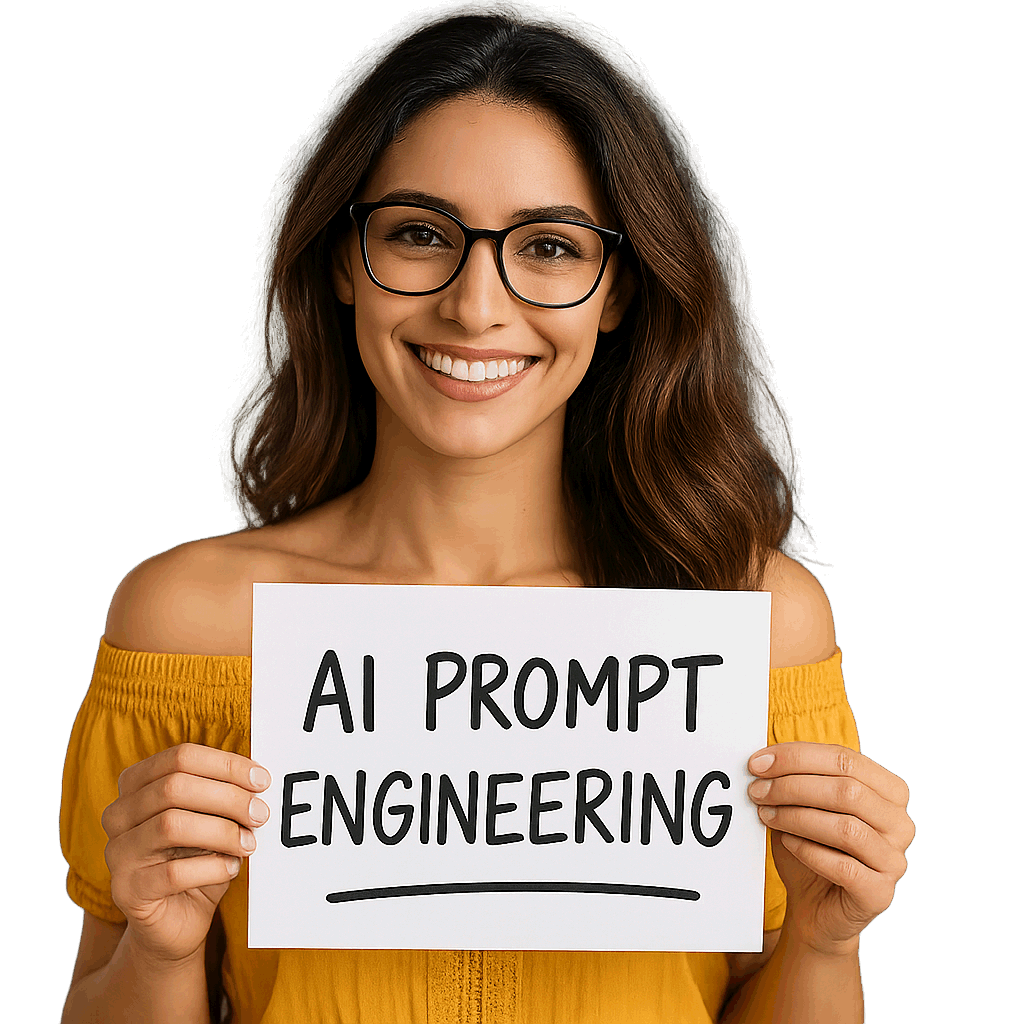

AI prompt engineering is the latest fashionable label in the tech industry, paraded as if it were a brand-new science when in reality it is little more than structured guesswork dressed up with jargon. At its core, it means crafting the instructions you feed into a language model so that the system produces useful answers. The machine does not understand in any human sense. It simply reacts to patterns, so the person writing the prompt becomes responsible for guiding that blind pattern matching toward something usable.

The rise of prompt engineering is less about innovation and more about the limitations of the tools. If these systems were truly intelligent, they would not require endless tweaking of phrasing to yield acceptable results. The fact that an extra adjective or a reordering of clauses can change the output so drastically exposes how fragile the underlying process really is. What is framed as a creative art is often damage control, compensating for the flaws of a system that only pretends to reason.

Practitioners describe techniques such as zero-shot, few-shot, and chain-of-thought prompting as if they were breakthroughs. In reality they are workarounds: zero-shot prompting gives the model only an instruction, few-shot prompting adds worked examples for it to imitate, and chain-of-thought prompting asks it to write out intermediate steps. None of this amounts to genuinely understanding the problem. The AI parrots patterns fed into it, so the engineer becomes a stage manager, arranging cues in the right order. It is impressive that such methods can yield coherent results, but that should not be confused with depth of intelligence.
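To make the three techniques named above concrete, here is a minimal sketch of how the prompts differ as plain strings. The function names and the `Input:` / `Output:` layout are assumptions chosen for illustration, not a standard format, and no model is called.

```python
from typing import List, Tuple


def zero_shot(task: str, text: str) -> str:
    """Instruction plus input, with no worked examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task: str, examples: List[Tuple[str, str]], text: str) -> str:
    """Same instruction, preceded by solved examples for the model to imitate."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def chain_of_thought(task: str, text: str) -> str:
    """Instruction plus a cue asking the model to write out intermediate steps."""
    return f"{task}\n\nInput: {text}\nLet's think step by step."


if __name__ == "__main__":
    task = "Classify the sentiment of the review as positive or negative."
    examples = [("Great battery life.", "positive"), ("Broke in a week.", "negative")]
    print(few_shot(task, examples, "Arrived late but works fine."))
```

The only difference between the three is what surrounds the input, which is the point of the paragraph above: the techniques arrange cues rather than change the model.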

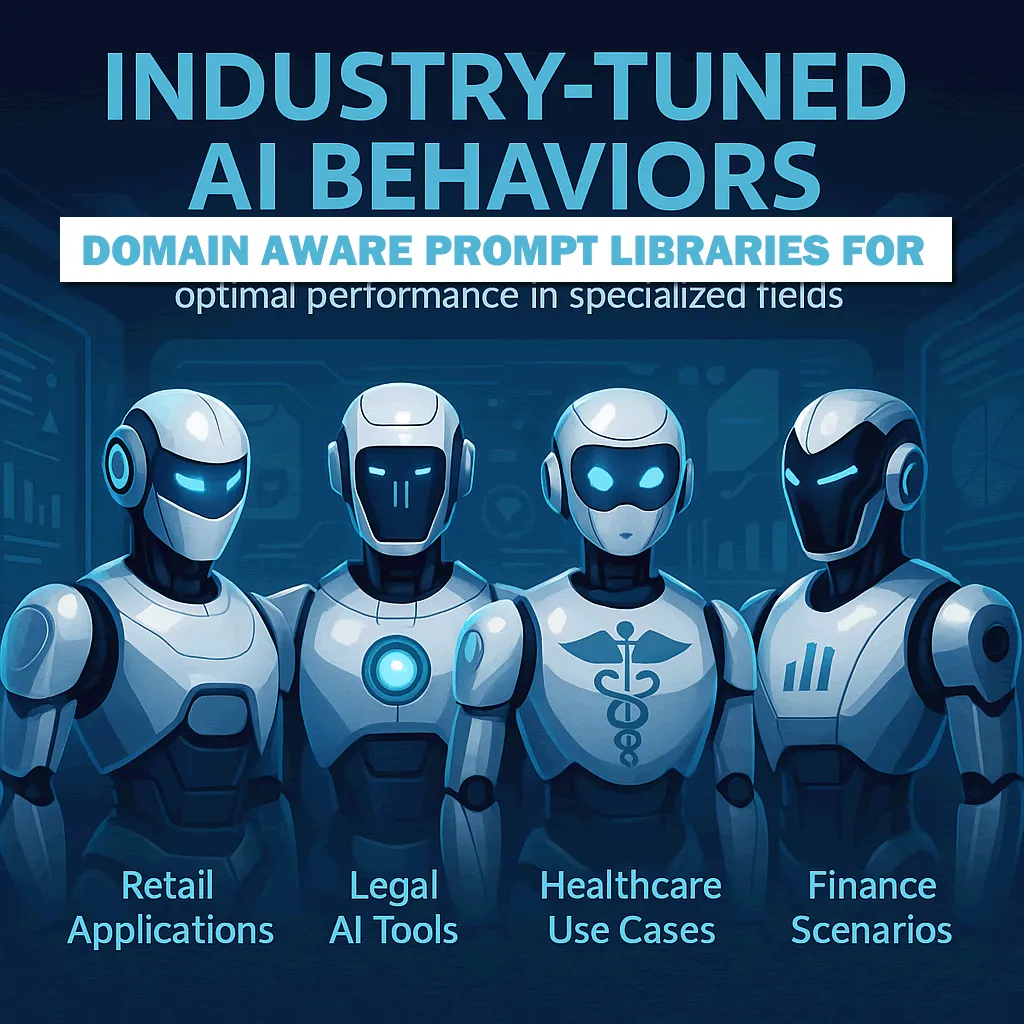

Every industry has its own terminology, logic, and expectations. We develop domain-aware prompt libraries that help models perform with clarity and reliability across your most critical tasks.

Retail Applications: Power recommendation engines and product descriptions aligned with your brand tone and audience behavior.

Legal AI Tools: Structure contract interpretation, clause extraction, and compliance reviews.

Healthcare Use Cases: Generate SOAP notes, summarize patient history, or manage triage interactions using clinical language.

Finance Scenarios: Drive SEC report analysis, risk evaluations, and investment summaries with regulatory accuracy.

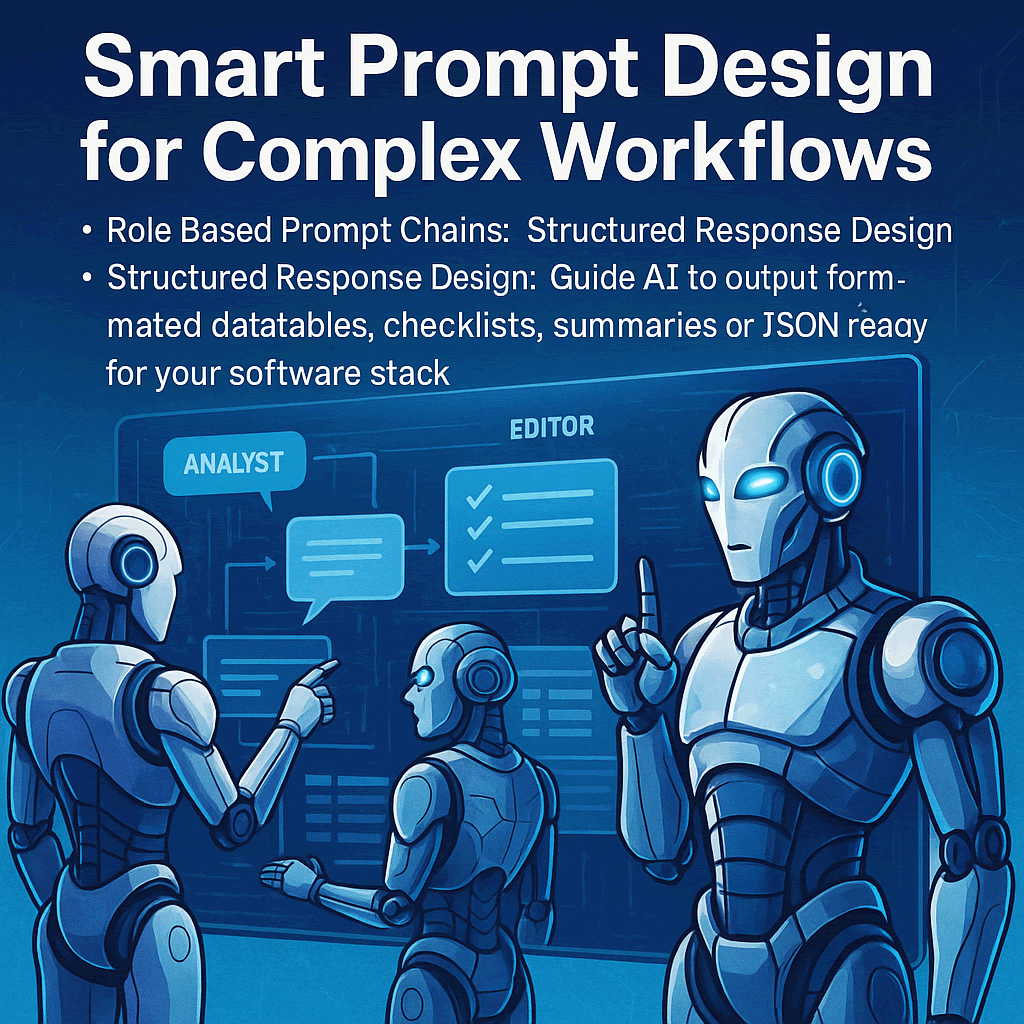

AI doesn’t operate in a vacuum. We design intelligent systems where prompts mimic the way teams think and communicate.

- Role-Based Prompt Chains: Orchestrate multi-step outputs where different “AI agents” work together, like an analyst, editor, and presenter.

- Structured Response Design: Guide AI to output formatted data tables, checklists, summaries, or JSON ready for your software stack.

- Multistage Processes: Map out complete support flows, sales conversations, and diagnostics logic, making AI an operational asset.
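The role chain and structured output ideas in the list above can be sketched roughly as follows. This is an illustration under assumptions, not a description of any particular product: `run_chain`, `parse_structured`, and `fake_model` are hypothetical names, and `fake_model` is a canned stand-in for a real LLM call so the example runs offline.

```python
import json
from typing import Callable, Dict, List

# Hypothetical type: any function that takes a prompt string and returns text.
Model = Callable[[str], str]


def run_chain(roles: List[str], task: str, model: Model) -> str:
    """Pass the task through each role in order; each role sees the previous output."""
    current = task
    for role in roles:
        prompt = f"You are the {role}.\n\nInput:\n{current}\n\nReturn your result."
        current = model(prompt)
    return current


def parse_structured(raw: str, required: List[str]) -> Dict[str, object]:
    """Parse a JSON reply and fail loudly if it is malformed or missing keys."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


def fake_model(prompt: str) -> str:
    """Stand-in for a real model: only the presenter role returns JSON."""
    if prompt.startswith("You are the presenter"):
        return '{"title": "Q3 summary", "bullets": ["revenue up", "churn flat"]}'
    return "plain-text notes"


if __name__ == "__main__":
    roles = ["analyst", "editor", "presenter"]
    raw = run_chain(roles, "Summarize Q3 sales.", fake_model)
    print(parse_structured(raw, ["title", "bullets"]))
```

Validating the final reply before it reaches downstream software matters because a model can return prose or incomplete JSON even when asked for a strict format.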

The business world has of course rushed to monetize this. Courses, certificates, and entire job postings now revolve around prompt engineering. Companies package it as a specialized skill set worth paying for, ignoring the fact that much of it boils down to trial and error with fancy labeling. The profession exists not because the skill is rare, but because the hype cycle demands new titles to justify investment.

There is also the question of longevity. As models evolve and interfaces improve, many of the elaborate tricks of prompt engineering will become obsolete. Systems will either be trained to interpret vaguer input more effectively or wrapped in user interfaces that hide the complexity. What is marketed today as a distinct profession may in a few years look like an early awkward phase of interacting with immature tools.

In the end, AI prompt engineering is both real and inflated. Real, because wording does shape the performance of these systems. Inflated, because the discipline is treated as if it were rocket science when it is often closer to poking a vending machine until it gives you the snack you want. It is a temporary craft born out of imperfect technology, and it will last only as long as the machines remain brittle enough to require human babysitting.

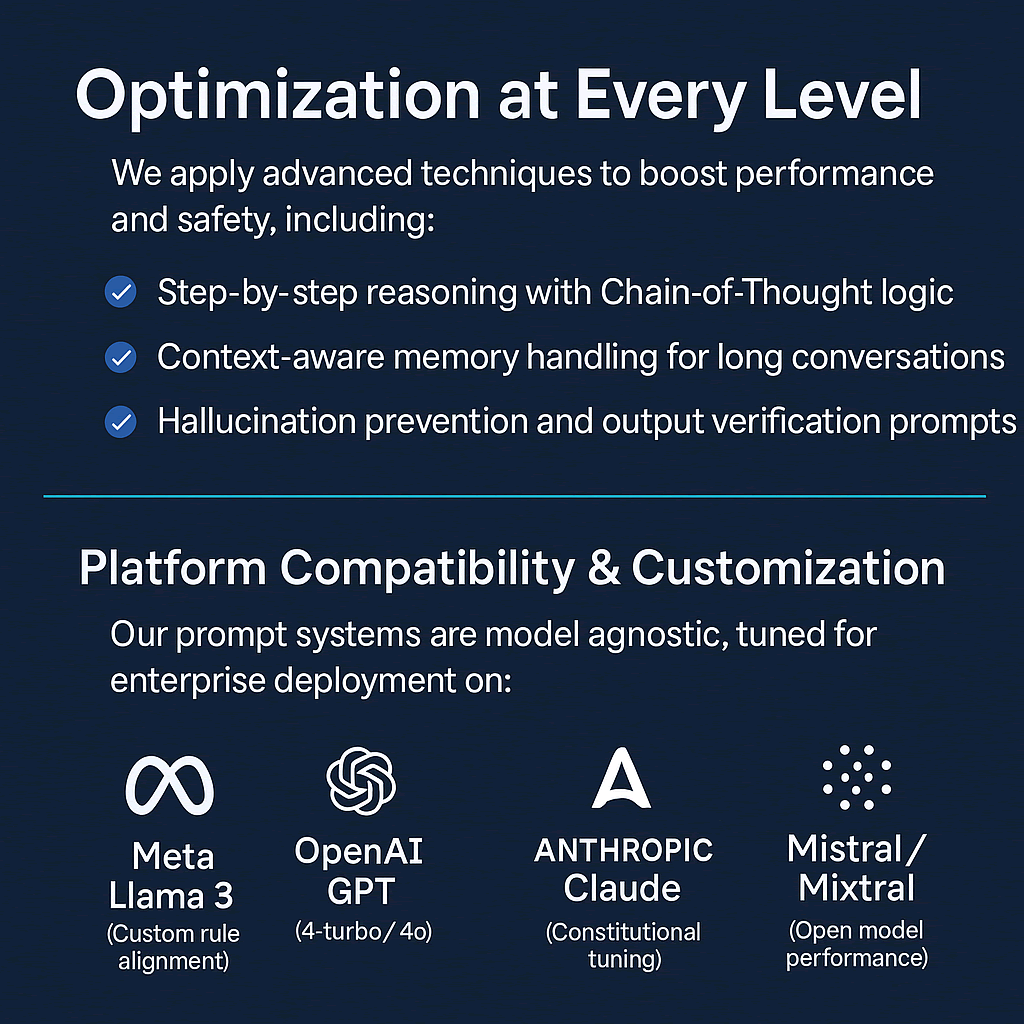

We apply advanced techniques to boost performance and safety, including:

- Step-by-step reasoning with Chain-of-Thought logic

- Context-aware memory handling for long conversations

- Hallucination prevention and output verification prompts
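As one illustration of the memory-handling item above, a common approach is to keep the system message and drop the oldest turns once a size budget is exceeded. The sketch below assumes a simple message format and uses a word count as a crude stand-in for real token counts; `trim_history` and `rough_tokens` are hypothetical helpers, not part of any library.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def rough_tokens(text: str) -> int:
    """Crude size estimate: whitespace-separated words, not real tokenizer counts."""
    return len(text.split())


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep the system message plus as many of the newest turns as fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(rough_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = rough_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a support assistant."},
        {"role": "user", "content": "My order 123 is late."},
        {"role": "assistant", "content": "Sorry to hear that."},
        {"role": "user", "content": "Can I get a refund?"},
    ]
    for message in trim_history(history, budget=15):
        print(message["role"], "|", message["content"])
```

A production system would more likely count tokens with the target model's tokenizer and summarize dropped turns instead of discarding them; this sketch covers only the windowing step.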

Platform Compatibility & Customization

Our prompt systems are model-agnostic, tuned for enterprise deployment on:

Meta Llama 3 (Custom rule alignment)

OpenAI GPT (4-turbo / 4o)

Anthropic Claude (Constitutional tuning)

Mistral / Mixtral (Open model performance)

We go beyond one-time prompt design. Wemaxa provides:

- Version control, benchmarking, and red teaming in development

- Secure API deployment and prompt injection safeguards in production

- Usage analytics, prompt drift detection, and quarterly optimization
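Prompt versioning and drift detection, mentioned in the list above, can be approximated with the standard library alone. The sketch below is an assumption about how such checks might look, not a description of Wemaxa's tooling: it hashes a template to get a version id and flags test cases whose current output has moved too far from a stored baseline. The 0.8 threshold is arbitrary.

```python
import hashlib
from difflib import SequenceMatcher
from typing import Dict, List


def prompt_version(template: str) -> str:
    """Short content hash, so any edit to the template yields a new version id."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1] from the standard library."""
    return SequenceMatcher(None, a, b).ratio()


def drifted_cases(
    baseline: Dict[str, str], current: Dict[str, str], threshold: float = 0.8
) -> List[str]:
    """Return ids of test cases whose current output differs too much from baseline."""
    return [
        case_id
        for case_id, expected in baseline.items()
        if similarity(expected, current.get(case_id, "")) < threshold
    ]


if __name__ == "__main__":
    baseline = {
        "greet": "Hello, how can I help you today?",
        "refund": "Refunds take 5 business days.",
    }
    current = {
        "greet": "Hello, how can I help you today?",
        "refund": "I cannot discuss that.",
    }
    print(prompt_version("Summarize: {text}"), drifted_cases(baseline, current))
```

Character-level similarity is a blunt measure that penalizes harmless rewording; it is used here only because it needs no external dependencies.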

Why Wemaxa Prompt Engineering?

✅ Tailored Logic: We design prompts that think like your business

✅ Performance Focus: Every word is tested for clarity, reliability, and results

✅ Secure & Scalable: From dev sandbox to live production

✅ Future-Ready: Built to integrate the latest AI techniques and models

Behind every powerful AI solution is a precisely crafted conversation. At Wemaxa, we specialize in designing the language that guides large language models to produce accurate, relevant, and structured results. Our prompt engineering services turn generic AI tools into high-performance, business-specific systems that understand your context and deliver real value. We help businesses create smarter, safer, and more effective AI interactions, whether that means fine-tuning model outputs, building internal assistants, or structuring long-form dialogue. From legal teams to product designers, our work supports those who need AI to think, reason, and speak like a domain expert.